
Axel on Data Science.

The quality of data is super important, sometimes a matter of life and death. Data breeding can take data distributed in data lakes and help you judge the quality of the data sets.

— Prof. Axel Ngonga

Web semantics and big data scientist, University of Paderborn

Andy: “What kind of music do you like?”

Axel: “All kinds of music. But I really like progressive metal. Do you know Dream Theater?”

Andy: “No.”

Axel: “They’re really cool.”

Andy: “Do you know The War on Drugs?”

Axel: “Is that a band?”

Andy: “Yeah, check them out.”

Before we really get going, let me ask you one simple question about the word “data”: Singular or plural?

Both. It really depends on the context. Sometimes it’s a matter of personal semantics. Semantics allows us to have this conversation right now. Because I’m using language that you understand, we can convey meaning.


But your main field of interest isn’t communication between humans, is it? It’s between man and machine, or maybe I should say machine and man?

With machines, it’s a different language, so when you want to communicate with a machine, you have to use a language that the machine understands. My job is to make this interface work: connecting humans and data through machines, to be precise. So when I say ‘semantics,’ I basically mean that I want to create a way to take the meaning of things in huge amounts of data and transform them into models such that humans and machines can find some form of lingua franca. For machine intelligence and human intelligence to meet and work together, they need to speak the same language. I try to make the semantics explicit.

Machines don’t respond to nuance yet… right?

Right. Eventually, they will; the interesting thing here is that you can train them to do that. And that means that if I can get humans and machines to start working together, we can actually solve problems. For example, in medicine, we may want to know more about which substances are carcinogenic. It takes a machine to mine the tons of data and say: these are the ones I’ve found, so could a substance with similar characteristics also be a candidate to cause cancer? And the human expert on the other side of the equation would say: most probably not, I’ve worked with this before, the effects are benign.


Other than in medicine, how else could semantic web technologies be used?

Agriculture is one area: looking at the different products you spray on plants and what happens afterward. But also something industrial, like being able to use data to predict when a problem will arise in, say, a printing plant, and, most importantly, why. If you use statistical models, you can actually predict that a printer is going to break down. What you often don’t have is “the why.”

Once machines and humans start speaking the same natural language, you get totally different types of interactions. I’m interested in machines being able to tell you why they do certain things, why they choose certain data, why they’re suggesting a particular machine-learning model as the one to solve a problem. That’s especially important when you think about areas such as the law. I think it was in New York where they looked at why certain people were given the opportunity to post bail rather than be incarcerated, and other people were not. When humans looked into the machine-learning model, they realized there was quite a strong bias based on skin color and race.

Axel, what happens if this depth of knowledge falls into the wrong hands? Is too much knowledge sometimes a dangerous thing?

It really depends on the context. I live in Germany, and when you think about the Second World War, many soldiers said, “I was given orders, I just did what I was told to do,” and they never asked why. But the ‘why question’ is fundamental to make us advance as humans. If we can’t ask why, we cannot guarantee that machines perform in a way that is reliable from the perspective of ethics in human interaction. Machines will just look at the stats: no feelings, nothing. That’s fine; that’s a machine’s job. It is our job as humans to take the decisions of a machine and ask why.


So, in the end, it will augment humanity—

Absolutely.

Instead of diminishing it.

Exactly. Yeah. Do you remember when artificial intelligence was used to judge beauty, and it defined beauty as being tall with a fair skin tone? Humans can reject the model that came up with that and teach machines that it’s not okay to use race in this way.

Are you working on anything else that could improve the quality of data?

Data breeding. We’re working on that, but it’s not quite ready yet.

Data breeding?

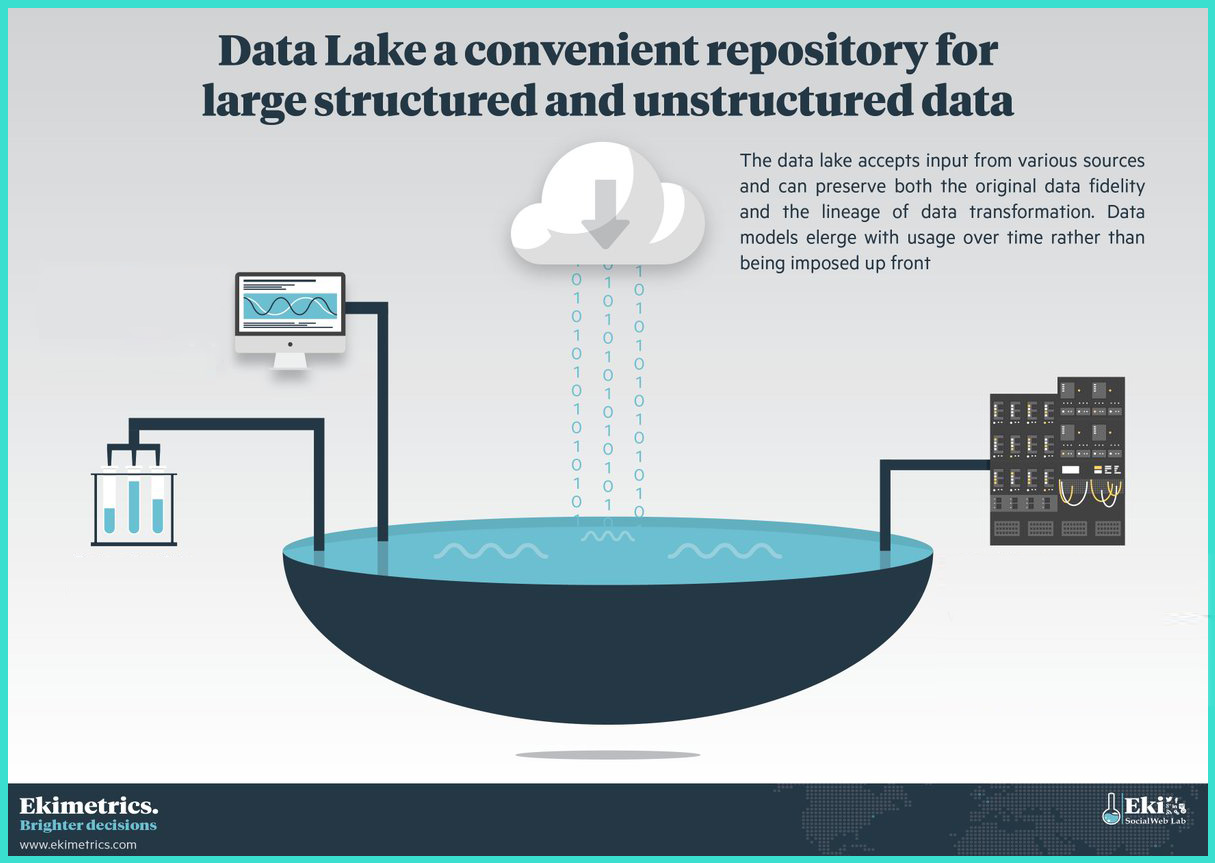

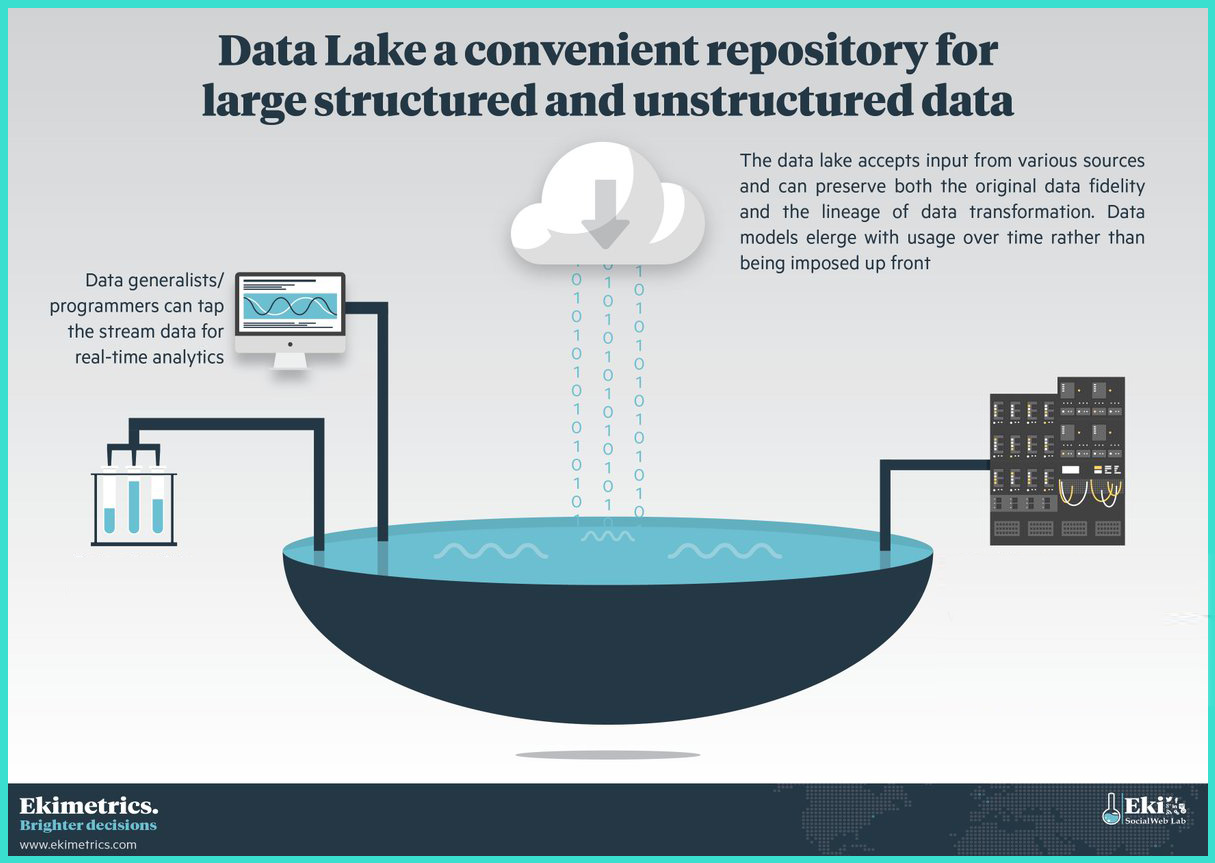

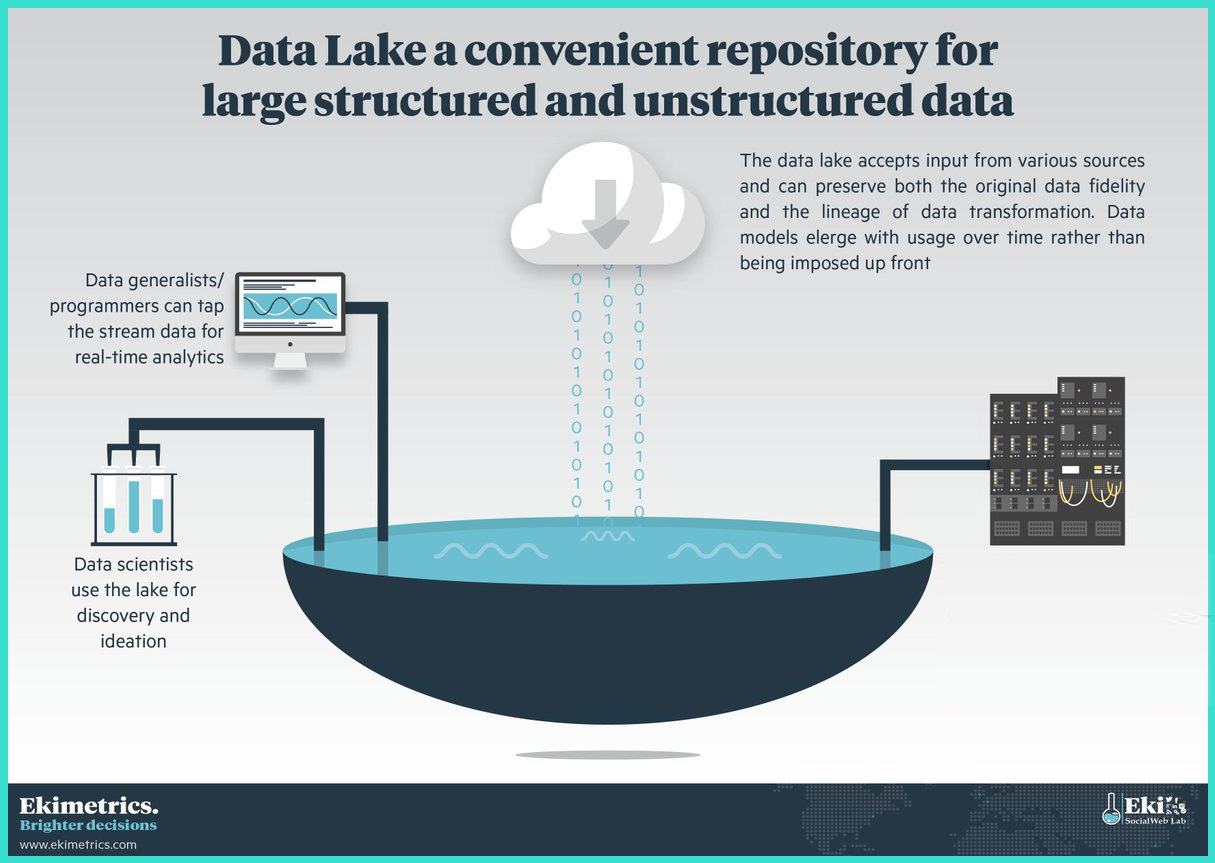

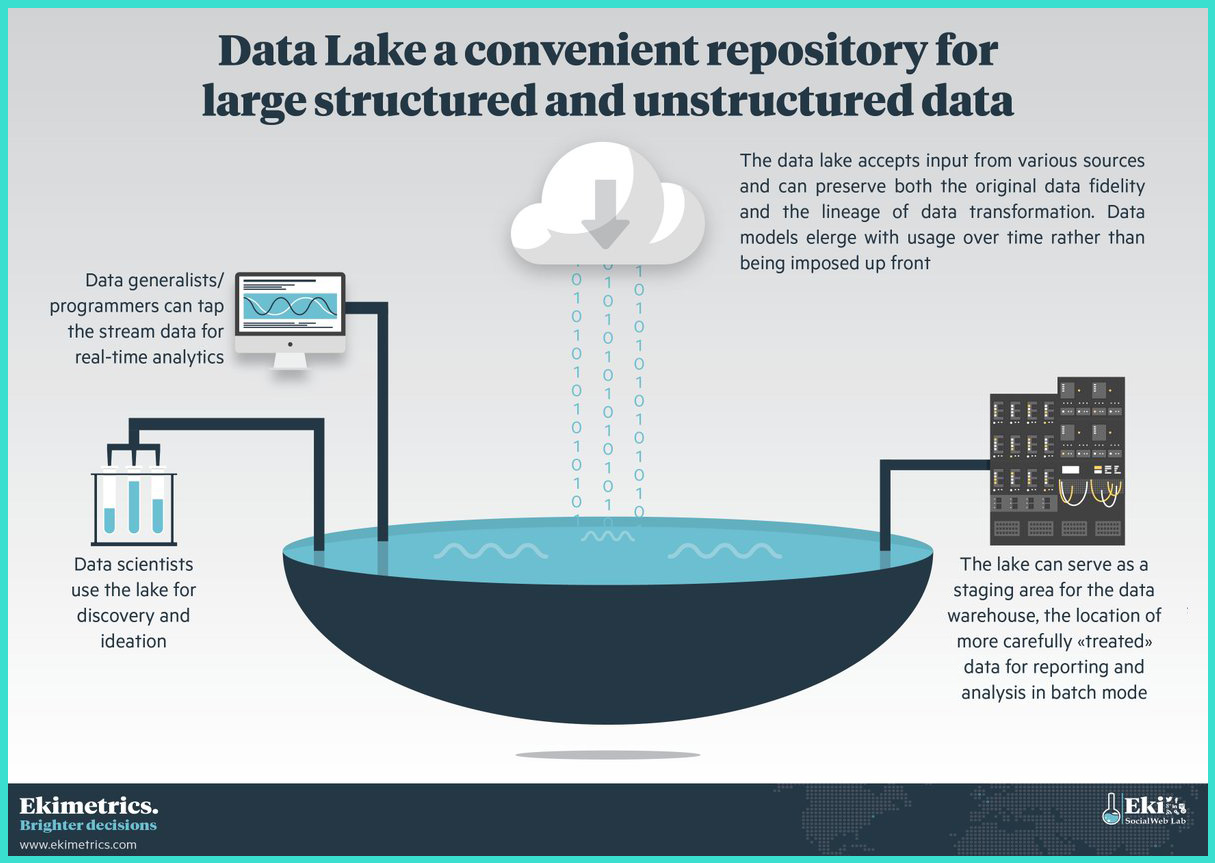

The idea of breeding data really came about when we considered so-called data lakes. Data lakes are like holding bins: huge data storage units. Data breeding would allow you to get the most out of the data held in those data lakes. If you think about ‘living data,’ you could actually breed data to create higher-quality data items that fix errors and are more exact and helpful, that sort of thing. For example, let’s say you wanted to make sure two medicines didn’t interact in a harmful way if taken at the same time. You want your engine to be as accurate as possible, because the quality of the data is super-important, possibly a matter of life and death. Data breeding can take data distributed across data lakes and help you judge the quality of the data sets.

So when the answer comes back and says, you know, “No, stop, these two drugs will interact badly, they’re terrible for the person,” you’d be able to trust that answer more than you could before?

Exactly. No algorithm is perfect, though. It doesn’t mean the data is perfect, but the engine is more likely to give you the right answer. And we’ll be creating better engines that can answer questions and also answer the ‘why question’: why did you do that? That’s really important.

Thank you for sharing your insights, Axel!